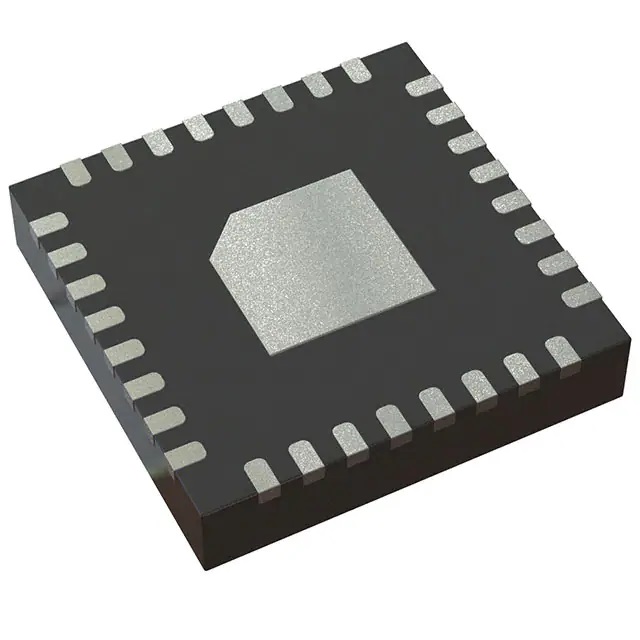

XC7Z020-2CLG484I New Original Electronic Components Integrated Circuits BGA484 IC SOC CORTEX-A9 766MHZ 484BGA

Product Attributes

| TYPE | DESCRIPTION |
| --- | --- |
| Category | Integrated Circuits (ICs) |
| Mfr | AMD Xilinx |
| Series | Zynq®-7000 |
| Package | Tray |
| Standard Package | 84 |
| Product Status | Active |
| Architecture | MCU, FPGA |
| Core Processor | Dual ARM® Cortex®-A9 MPCore™ with CoreSight™ |
| Flash Size | - |
| RAM Size | 256KB |
| Peripherals | DMA |
| Connectivity | CANbus, EBI/EMI, Ethernet, I²C, MMC/SD/SDIO, SPI, UART/USART, USB OTG |
| Speed | 766MHz |
| Primary Attributes | Artix™-7 FPGA, 85K Logic Cells |
| Operating Temperature | -40°C ~ 100°C (TJ) |
| Package / Case | 484-LFBGA, CSPBGA |
| Supplier Device Package | 484-CSPBGA (19×19) |
| Number of I/O | 130 |
| Base Product Number | XC7Z020 |

Communications is the largest application area for FPGAs

Compared with other types of chips, FPGAs are programmable, and that flexibility is well suited to the continual revision of communication protocols. FPGA chips are therefore widely used in wireless and wired communication equipment.

With the advent of the 5G era, FPGA demand is rising in both volume and price.

In terms of volume, because 5G radio operates at higher frequencies, reaching the same coverage as 4G requires approximately 3-4 times as many base stations. In China, for example, the total number of mobile communication base stations reached 9.31 million by the end of 2020, a net increase of 900,000 for the year, of which 5.75 million were 4G base stations; the future scale of 5G construction is expected to be in the tens of millions. At the same time, because massive antenna arrays demand highly concurrent processing, a single 5G base station will use 4-5 FPGAs, up from 2-3 in a single 4G base station. As a result, total usage of FPGAs, a core component of 5G infrastructure and terminal equipment, will also increase.

In terms of unit price, FPGAs are mainly used in the baseband of transceivers. In the 5G era, larger FPGAs will be needed because of the increase in the number of channels and in computational complexity, and since FPGA pricing is positively correlated with on-chip resources, the unit price is expected to rise further. In FY22 Q2, Xilinx's wired and wireless revenue increased by 45.6% year-on-year to US$290 million, accounting for 31% of total revenue.
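The volume and revenue figures quoted above can be combined in a rough back-of-envelope calculation. The sketch below uses only numbers from the text; the function names are hypothetical, and pairing the range endpoints to get a low and a high case is an assumption, not something the source states.

```python
# Back-of-envelope arithmetic using only figures quoted in the text.
# Function names and the pairing of range endpoints are illustrative assumptions.


def fpga_volume_multiplier(station_ratio, fpgas_per_4g, fpgas_per_5g):
    """Return (low, high) ratio of 5G to 4G FPGA unit demand.

    Each argument is a (min, max) range. The low case pairs the smallest
    5G figures with the largest 4G figure, and the high case the reverse.
    """
    low = station_ratio[0] * fpgas_per_5g[0] / fpgas_per_4g[1]
    high = station_ratio[1] * fpgas_per_5g[1] / fpgas_per_4g[0]
    return low, high


def implied_total_revenue(segment_revenue, segment_share):
    """Total revenue implied by a segment's revenue and its share of the total."""
    return segment_revenue / segment_share


# 3-4x as many base stations; 2-3 FPGAs per 4G station; 4-5 per 5G station.
low, high = fpga_volume_multiplier((3, 4), (2, 3), (4, 5))

# US$290 million at 31% of total revenue (figures in US$ million).
total = implied_total_revenue(290, 0.31)

print(f"5G vs 4G FPGA units: {low:.1f}x to {high:.1f}x")
print(f"Implied total revenue: about US${total:.0f} million")
```

Under these assumptions the unit-demand multiplier falls between roughly 4x and 10x, and the quoted segment share implies total quarterly revenue of about US$935 million. Both are estimates derived from the article's own figures, not independent data.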

FPGAs can be used as data center accelerators, AI accelerators, SmartNICs (intelligent network cards), and accelerators in network infrastructure. In recent years, the boom in artificial intelligence, cloud computing, high-performance computing (HPC), and autonomous driving has given FPGAs new market momentum and opened up room for incremental growth.

Demand for FPGAs driven by AI accelerator cards

Due to their flexibility and high-speed computing capabilities, FPGAs are widely used in AI accelerator cards. Compared with GPUs, FPGAs have a clear energy-efficiency advantage; compared with ASICs, they are more flexible, which lets them keep pace with the rapid evolution of neural networks and the iterative updates of algorithms. Given the broad development prospects of artificial intelligence, demand for FPGAs in AI applications is expected to keep growing. According to Semico Research, the market for FPGAs in AI application scenarios will triple from 2019 to 2023 to reach US$5.2 billion. Set against the US$8.3 billion total FPGA market in 2021, the potential of AI applications should not be underestimated.
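To put the forecast in annual terms, the sketch below derives the growth rate implied by the figures quoted above. It assumes that "triple" means the 2023 value is three times the 2019 value and that 2019 to 2023 spans four annual compounding periods; neither the 2019 base nor the growth rate is stated in the source.

```python
def implied_cagr(growth_multiple, years):
    """Compound annual growth rate implied by an overall growth multiple."""
    return growth_multiple ** (1 / years) - 1


ai_market_2023 = 5.2      # US$ billion, forecast quoted in the text
growth_multiple = 3.0     # assumption: "triple" means 3x the 2019 value
years = 2023 - 2019       # assumption: four annual compounding periods
fpga_market_2021 = 8.3    # US$ billion, quoted in the text

base_2019 = ai_market_2023 / growth_multiple
cagr = implied_cagr(growth_multiple, years)
ratio_to_2021_market = ai_market_2023 / fpga_market_2021

print(f"Implied 2019 AI FPGA market: US${base_2019:.2f} billion")
print(f"Implied CAGR 2019-2023: {cagr:.1%}")
print(f"2023 AI forecast vs 2021 total FPGA market: {ratio_to_2021_market:.0%}")
```

On these assumptions, the implied 2019 base is about US$1.7 billion and the implied annual growth rate is a little under 32%. The last ratio compares a 2023 forecast with a 2021 total, as the text does, so it indicates scale rather than true market share.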

A more promising market for FPGAs is the data center

Data centers are one of the emerging application markets for FPGA chips, whose core strengths there are low latency and high throughput. Data center FPGAs are mainly used for hardware acceleration and can deliver significant speedups over traditional CPU solutions when processing custom algorithms. For example, the Microsoft Catapult project used FPGAs instead of CPUs in the data center and processed Bing's custom algorithms 40 times faster. As a result, FPGA accelerators have been deployed on servers in Microsoft Azure, Amazon AWS, and Alibaba Cloud for compute acceleration since 2016. With the pandemic accelerating global digital transformation, data centers will demand still higher chip performance, and more of them will adopt FPGA solutions, which will also increase the share of FPGAs in the total value of data center chips.